Struggling to find that elusive metadata in the output of the Azure CLI blob list command? You're not alone! Let's crack this mystery together in this quick blog post.

Problem with Default Blob List Command

When you use the Azure CLI to list the contents of a blob container, not every field you may be interested in is populated. For each blob entry, some properties appear to be unexpectedly null.

In my case, I was looking for the copy properties of each blob, in particular the copy status.

For example, running:

```powershell
az storage blob list `
    --account-name $accountName `
    --container-name $containerName `
    --output jsonc
```

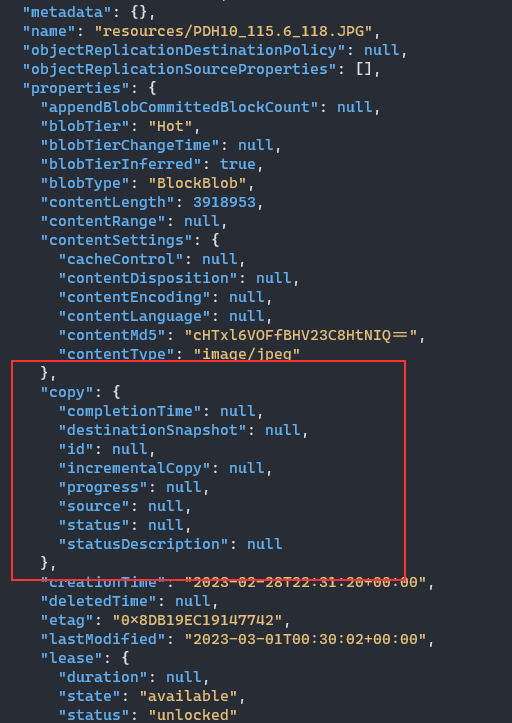

No copy information is returned. The copy property is present, but it is simply null.

The blob was copied from another storage account, so those fields should contain data.

Running az storage blob show against that individual blob confirms this, but we want the same information in bulk for every blob in the container.
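For comparison, the one-blob-at-a-time approach can be sketched in Python as below. This is an illustration under assumptions: the az CLI is installed and signed in, and `build_show_args` and `show_copy_properties` are hypothetical helper names, not part of any Azure SDK. Because it starts one az process per blob, it would quickly become slow for a large container.

```python
import json
import shutil
import subprocess


def build_show_args(account_name: str, container_name: str, blob_name: str) -> list[str]:
    """Arguments for `az storage blob show`, trimmed to the copy properties via --query."""
    return [
        "az", "storage", "blob", "show",
        "--account-name", account_name,
        "--container-name", container_name,
        "--name", blob_name,
        "--query", "properties.copy",
        "--output", "json",
    ]


def show_copy_properties(account_name: str, container_name: str, blob_name: str):
    """Run the command for a single blob and return the parsed copy properties (or None)."""
    args = build_show_args(account_name, container_name, blob_name)
    # az is a .cmd shim on Windows, so resolve its full path before launching it.
    args[0] = shutil.which("az") or "az"
    result = subprocess.run(args, capture_output=True, text=True, check=True)
    # az may print nothing when the queried value is null, so guard against empty output.
    return json.loads(result.stdout) if result.stdout.strip() else None
```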

Applying the --include Switch

To do that, you can use the --include switch.

--include

Specify one or more additional datasets to include in the response. Options include: (c)opy, (d)eleted, (m)etadata, (s)napshots, (v)ersions, (t)ags, (i)mmutabilitypolicy, (l)egalhold, (d)eletedwithversions. Can be combined.

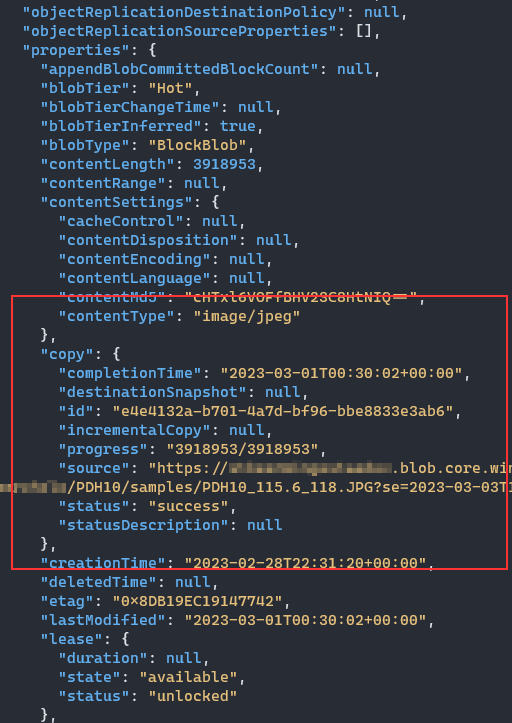

So by passing c to the --include switch, we get the full copy properties for each blob:

```powershell
az storage blob list `
    --account-name $accountName `
    --container-name $containerName `
    --include c `
    --output jsonc
```
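If you want to consume this output from a script rather than read it, a small Python sketch like the one below can build and run the same listing. It is an illustration, not part of the CLI: the helper names are hypothetical, the valid letters are copied from the help text above, and it requests `--output json` because `jsonc` is intended for reading in a terminal. Several letters in one string (for example `cm` for copy properties plus metadata) is how the options are combined.

```python
import json
import shutil
import subprocess

# Single-letter dataset codes from the `az storage blob list --include` help text.
# ("d" appears twice in that help text, for deleted and deletedwithversions.)
VALID_INCLUDE_CODES = set("cdmsvtil")


def build_list_args(account_name: str, container_name: str, include: str = "") -> list[str]:
    """Arguments for `az storage blob list`, optionally with combined --include codes."""
    unknown = set(include) - VALID_INCLUDE_CODES
    if unknown:
        raise ValueError(f"unknown --include code(s): {''.join(sorted(unknown))}")
    args = [
        "az", "storage", "blob", "list",
        "--account-name", account_name,
        "--container-name", container_name,
        "--output", "json",
    ]
    if include:
        # Only request the extra datasets you need; each one makes the listing slower.
        args += ["--include", include]
    return args


def list_blobs(account_name: str, container_name: str, include: str = "") -> list:
    """Run the listing (az CLI on PATH, already signed in) and return the parsed JSON array."""
    args = build_list_args(account_name, container_name, include)
    # az is a .cmd shim on Windows, so resolve its full path before launching it.
    args[0] = shutil.which("az") or "az"
    result = subprocess.run(args, capture_output=True, text=True, check=True)
    return json.loads(result.stdout)
```

For example, list_blobs("mystorageaccount", "mycontainer", include="c") (hypothetical names) would return the same data as the command above, as a list of Python dictionaries.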

It’s About Efficiency

When you run the blob list command with the --include switch, it takes noticeably longer to execute.

Most users won't need these extra datasets, so it makes sense that they are excluded by default. What can lead you astray is that the properties still appear in the output, just set to null.

The more include options you add, the longer the query will take to run. Only specify what you need.

Real-World Example: Handling Large File Transfers

This tip proved useful on a project that dealt with a lot of large files. When these files were copied in bulk from one storage account to another, many of them ended up as 0-byte blobs in the destination storage account.

The cause was that the SAS token used for the copy expired before Azure had finished copying (many files were 85 GiB or larger!). Finding every blob in a container with a "failed" copy status was crucial for initiating a re-copy.

```powershell
az storage blob list `
    --account-name $accountName `
    --container-name $containerName `
    --include c `
    --output jsonc `
    --query "[?properties.copy.status=='failed'].{name:name, copyStatusDescription: properties.copy.statusDescription}"
```

With the JMESPath filter expression above, you can list the names of the blobs that failed to copy, along with the reason why!
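If you already have the listing saved as JSON (for example from the same command with --output json and no --query), the filter can be reproduced in Python, which makes it easy to combine with other checks. The sketch below mirrors the JMESPath expression, also lists blobs whose properties.contentLength is 0 (the symptom described above), and builds az storage blob copy start arguments for a re-copy. The helper names, and the assumption that you supply a freshly generated SAS token for the source container, are hypothetical; whatever token you use would need an expiry long enough for your largest files.

```python
from typing import Any
from urllib.parse import quote


def failed_copies(blobs: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Python equivalent of:
    [?properties.copy.status=='failed'].{name:name, copyStatusDescription: properties.copy.statusDescription}
    """
    results = []
    for blob in blobs:
        # Without --include c the copy property is null, so default to an empty dict.
        copy = (blob.get("properties") or {}).get("copy") or {}
        if copy.get("status") == "failed":
            results.append({
                "name": blob.get("name"),
                "copyStatusDescription": copy.get("statusDescription"),
            })
    return results


def zero_byte_blobs(blobs: list[dict[str, Any]]) -> list[str]:
    """Names of blobs whose content length is 0 in the listing."""
    return [
        blob.get("name")
        for blob in blobs
        if (blob.get("properties") or {}).get("contentLength") == 0
    ]


def build_recopy_args(
    blob_name: str,
    source_container_url: str,
    source_sas_token: str,
    dest_account_name: str,
    dest_container_name: str,
) -> list[str]:
    """Hypothetical helper: `az storage blob copy start` arguments using a fresh source SAS."""
    # URL-encode the blob name (keeping "/" for virtual directories) and append the SAS.
    source_uri = (
        f"{source_container_url.rstrip('/')}/{quote(blob_name, safe='/')}"
        f"?{source_sas_token.lstrip('?')}"
    )
    return [
        "az", "storage", "blob", "copy", "start",
        "--account-name", dest_account_name,
        "--destination-container", dest_container_name,
        "--destination-blob", blob_name,
        "--source-uri", source_uri,
    ]
```

Each argument list can be passed to subprocess.run to start a re-copy; listing the container again with --include c then shows whether the new copies succeed.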

I hope this will be helpful for you one day.

As always, feel free to drop your thoughts or questions to me on Twitter @matttester. Happy architecting!